It’s not as if we hadn’t seen this coming. Philosophers have forever been complaining about the dehumanising march of technology, but over the last few decades the grumbling has understandably reached a fever pitch – and we should probably start taking notice. In 1973, Ivan Illich, an Austrian Catholic priest-turned-philosopher, published Tools for Conviviality, a succinct and brazen takedown of modernity and its corrosive effect on humanity, and a blueprint for how we might live within self-imposed technological limits.

For Illich, the technocratic forces that have separated human work into a vast delta of interdependent specialised channels have done so at the expense of our capability as autonomous beings. Illich’s remedy was a return to convivial tools, which he defined as those that are readily available for use by anyone without licence, are understandable in their workings, impose no obligation on their use, and allow users to express themselves through them in personally meaningful ways.

His point was not about Luddite-style protectionism, but about how the incomprehensible nature of advanced tooling, and the specialist knowledge required to operate it, creates a learned helplessness and concentrates power in the hands of the few who understand. Put another way, when our only interfaces with the world are via tools which we do not and cannot comprehend, we not only cede our agency to the controllers of the tools, we also do dumb shit.

It’s why Steven Schwartz, a New York attorney, almost nuked a 30-year legal career by using ChatGPT to prepare court documents, which cited non-existent cases the chatbot had simply made up. In his defence, Schwartz testified that he assumed the cases were just “hard to find” when he couldn’t locate them with other tools. The fact that a veteran lawyer would happily throw decades of experience out of the window, rather than question the machine he was using, speaks volumes.

A January 2025 study by the Swiss Business School in Zurich, the first of its kind, has begun to put some scientific rigour behind the anecdotal evidence. It found a direct link between regular use of AI, with the ‘cognitive offloading’ that it entails, and a decline in critical thinking, especially among the young. A few weeks later, a paper from Microsoft Research pointed to exactly the same thing.

It does not take a great leap to see what is going to happen when this is planted in the toxic mulch left by the internet of the 2010s. It has taken a decade of debate to reach a consensus that social media and excessive smartphone use have decimated our collective mental wellbeing, especially that of the world’s children. Inventions created by adults who had taken for granted what they had gained from an offline youth were unleashed on the world with little thought for the long-term consequences.

Advertising helps fund Big Issue’s mission to end poverty

When combined with the overprotective paranoia of modern parenting, we have an entire generation who, on average, take fewer risks, are more anxious, have reduced interpersonal skills, get less sleep and have shorter attention spans. With the promise of technology that can take on the risk of failure and offer an easy way out of any of life’s problems, it is not just we adults who should be worried: we are on the brink of failing the next generation with another stroke of gross technological irresponsibility.

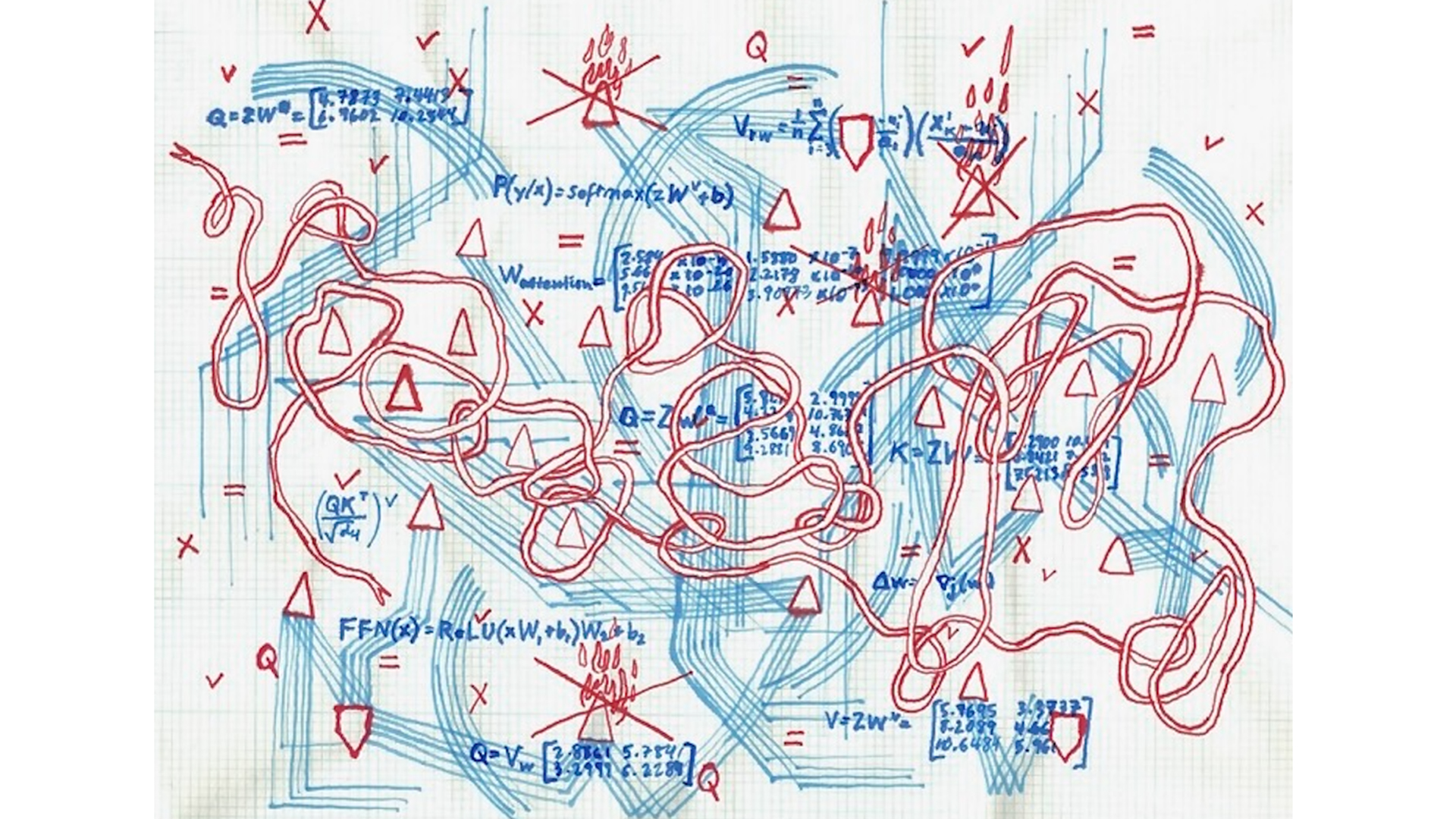

At first, AI might look like a convivial tool. It is available to almost everyone, it requires no specialist knowledge to use, and it empowers people to create output in sectors otherwise controlled by people with specialised knowledge, but this is mostly a trick. With the new magic that we have in our pockets, we are possibly entering a new era of learned helplessness, in which the ultimate direction is that we become unable to act without guidance from the machine.

We are on the cusp of a future where your calendar fills up with real-world events scheduled by your AI agent. Dates with potential lovers based on a mutual interaction between your respective AIs, with a robot assistant in your ear to guide you through the conversation to maximise the chance of connection; birthday presents with a personalised message automatically mailed to relatives who you barely think about; food and ingredients to cook it stacked up in your grocery basket and delivered to your door based on the nutrition plan agreed with your AI trainer.

With the unprecedented and exponentially accelerating pace of change, through one lens, it is as if we are witnessing the early and awkward birth of what Yuval Noah Harari termed ‘Homo Deus’ in the 2015 book of the same name, in which Homo sapiens evolve and essentially give way to a man-God machine assimilation.

The pace of change is such that the point at which we think we might reach ‘Artificial General Intelligence’ – a thinking machine that can outperform any human at most tasks – has been rushing closer every year, to the point we think we may now have it by 2027. The huge sums invested and the rapid pace of improvement in both hardware and software that have followed can both be credited, but what is also needed is more data for training, particularly private data.

The first generation of AI systems that have escaped the research labs in the last couple of years have been trained on information scraped from the public internet. Think Wikipedia, Reddit, YouTube, Flickr and hundreds of thousands of publicly available books, papers and songs. In order to preserve the pace of advancement, we will be continually enticed to provide even more about ourselves and our daily lives via cheap and convenient products; but think for a moment. What if we just didn’t?


What if instead of fighting our technologically induced overload with more technology (either American or Chinese) we took a step back? What if rather than expecting the answer to be on tap, we embraced inconvenience a little and chose to exercise ourselves? Much like choosing to ride a bike rather than driving. It’s the difference between turning towards a machine to have an answer spat out, versus the gradual, embodied understanding that results from risk, frustration and multiple approaches, with all the doubts, failures and surprises that come along with it.

Just as no one can tell you what the practice of meditation or open-water swimming will yield, there is no shortcut, no easy way to absorb the innate knowledge that comes from doing it.

Some of the richest moments of our lives come from engaging with mystery; learning about your own impressions and reactions when encountering unpredictable and strange situations. It is through these messy processes that we build confidence and trust in ourselves and others, and discover the subjects we want to pursue to greater depths.

Commitment to a sustained practice in which a process is seen from beginning to end creates expertise and shorthand familiarity, like knowing a measurement at a glance or understanding when to check on the bread being baked, and this instinctive knowledge compounds throughout life, enabling us to join the dots between disciplines and concepts and allowing us to invent and create in the first place.

While it’s clear to most people that we should be using AI to cure cancer, reverse climate change and explore space, much of the application of this technology we have seen in our day-to-day lives thus far serves a kind of self-centred advancement which is, honestly, pretty boring. Even when AI makes art – a job we really didn’t need it to do – it falls embarrassingly short because it is essentially bereft of creative risk and is not channelled through any lived experience.

In a recent interview, Mikey Shulman, CEO of AI music company Suno, stated with absolute confidence that people don’t really enjoy making music.


“It’s not really enjoyable to make music now,” he says. “It takes a lot of time, it takes a lot of practice, you need to get really good at an instrument or really good at a piece of production software. I think the majority of people don’t enjoy the majority of the time they spend making music.”

The interviewer takes a second to understand what he’s just heard, and then probes with a basic counter: “Do you not think that’s like running? […] It’s hard to run, it is painful to run, you don’t particularly enjoy it but you love running, and you get good at it and you get better at it…” Shulman remains steadfast: “Most people drop out of that pursuit, because it was hard. […] The people who you know that run… that’s a highly biased selection of the population that fell in love with it.”

While Shulman’s generalisation is not untrue – many people drop out of many things because they are hard – we are in danger of sliding into an epidemic of the mind, in which the joy of figuring out a problem and seeing through a task end-to-end is replaced with a shortest-path-how-to for everything. What if, instead, we picture a world in which we don’t need technology to manage more time with technology and more mindless consumption?

We absolutely do not need AI agents dictating where our attention extends or plugging in what our schedule, meals, and relationships should be at the most efficient rate. Our attention, and even our ability to be bored, is the rare space where personhood, community-building, critical thinking, and imagination reside.

Let’s practise living and thinking in a way that can leave room for the unexpected, where we can be repeatedly charmed by how chance encounters can twist life paths: running into a friend in your grocery store and deciding to make dinner together, or going to a party and meeting someone who happens to be in town and might change the course of your life. Ultimately, it means not having our interior world, or social sphere, mediated by corporations.

Creative living – or thinking for oneself – is an existential freedom worth defending, and it’s the last frontier for profit-hungry corporations. While everyone else is waiting for someone to tell them what the answer is, we can regain some sovereignty in our lives by figuring out our own futures. Now, more than ever, we need visionaries with their own peculiar brand of thinking and imagining, not a scramble of recycled ideas and aesthetics cluelessly sat in the rain with McConaughey, wondering where it all went so wrong.


We grew up in a world without thinking machines, a luxury our children do not have. Having had no choice but to build our cognitive muscles through trial, error and critical thinking, we owe it to the next generation and to the future of our societies to set a good example and use our brains, while we still can.

Max Leonard is a technologist who is working at the frontier of human data and our right to privacy in the age of surveillance capitalism. In his spare time he tests the boundaries and patience of the security state.

Anne-Marie Litak creates multi-sensory experiences and events that aim to connect us to our humanity.
